Many will be familiar with the term bot, short for web robot. Bots are essential for effective operation of the web: web crawlers are a type of bot, automatically trawling sites looking for updates and making sure search engines know about new content. To this end, web site owners need to allow access to bots, but they can (and should) lay down rules. The standard here is to have a file associated with any web server called robots.txt that the owners of good bots should read and adhere to. However, not all bots are good; bad bots can just ignore the rules!

Most will also have heard of botnets, arrays of compromised user devices and/or servers that have illicit background tasks running to send spam or generate high volumes of traffic that can bring web servers to their knees through DDoS (distributed denial of service) attacks.

A Quocirca research report, Online Domain Maturity, published in 2014 and sponsored by Neustar (a provider of DDoS mitigation and web site protection/performance services), shows that the majority of organisations say they have either permanent or emergency DDoS protection in place, especially if they rely on websites to interact with consumers. However, Neustar's own March 2015 EMEA DDoS Attacks and Protection Report shows that in many cases organisations are still relying on intrusion prevention systems (IPS) or firewalls rather than custom DDoS protection. The report, which is based on interviews with 250 IT managers, shows that 7-10% of organisations believe they are being attacked at least once a week.

Other research suggests the situation may actually be much worse than this, but IT managers are simply not aware of it. Corero (another DDoS protection vendor) shows in its Q4 2014 DDoS Trends and Analysis report, which uses actual data regarding observed attacks, that 73% of attacks last less than 5 minutes. Corero says these are specifically designed to be short-lived and go unnoticed. This is a fine-tuning of the so-called distraction attack.
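
To make the point about short-lived attacks concrete, here is a minimal sketch in Python of how brief traffic bursts might be picked out of per-second request counts. The function name `find_short_bursts`, the median baseline and the threefold threshold are all assumptions made for illustration and are not taken from any of the reports cited; only the five-minute cut-off echoes the Corero figure.

```python
from statistics import median


def find_short_bursts(counts, factor=3.0, max_seconds=300):
    """Return (start, end) index pairs for short bursts of traffic.

    counts      -- requests seen in each one-second interval (hypothetical input)
    factor      -- how far above the median a second must be to count as a burst
    max_seconds -- only runs shorter than this are reported (300 s = 5 minutes)
    """
    if not counts:
        return []

    # Use the median as a rough baseline so the burst itself does not skew it.
    threshold = factor * median(counts)

    bursts = []
    start = None
    for i, count in enumerate(counts):
        if count > threshold:
            if start is None:
                start = i  # a new run above the threshold begins here
        elif start is not None:
            if i - start < max_seconds:
                bursts.append((start, i))
            start = None

    # Close a run that is still open when the series ends.
    if start is not None and len(counts) - start < max_seconds:
        bursts.append((start, len(counts)))
    return bursts


# Ten quiet minutes, a two-minute burst, then ten more quiet minutes.
traffic = [100] * 600 + [900] * 120 + [100] * 600
print(find_short_bursts(traffic))
```

A monitoring process that only looks at averages over ten minutes or more could easily smooth a burst like this away, which is why the sketch works on one-second intervals. Real DDoS protection products are considerably more sophisticated than a fixed threshold.
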
Arbor (yet another DDoS protection vendor) finds distraction to be the motivation for about 19-20% of attacks in its 2014 Worldwide Infrastructure Security Report. However, as with Neustar, this is based on what IT managers know, not what they do not know. The low-level, sub-saturation DDoS attacks reported by Corero are designed to go unnoticed but disrupt IPS and firewalls for just long enough to perpetrate a more insidious targeted attack before anything has been noticed. Typically it takes an IT security team many minutes to observe and respond to a DDoS attack, especially if they are relying on an IPS. That might sound fast, but in network time it is eons; attackers can easily insert their actual attack during the short minutes of the distraction.

So there is plenty of reason to put DDoS protection in place (other vendors include Akamai/Prolexic, Radware and DOSarrest). However, that is not the end of the bot story. Cyber-criminals are increasingly using bots to perpetrate another whole series of attacks. This story starts with another, sometimes legitimate and positive, activity of bots: web scraping, the subject of a follow-on blog, The rise and rise of bad bots – part 2 – beyond web scraping.

Source: http://www.computerweekly.com/blogs/quocirca-insights/2015/04/the-rise-and-rise-of-bad-bots.html

Continued here:

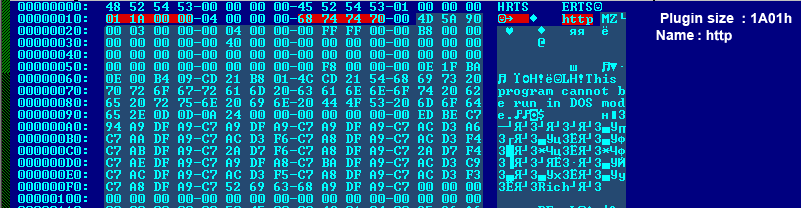

The rise and rise of bad bots – little DDoS