When a blog that typically attracts 30,000 visitors a day is hit with 5.35 million, its operators had better have been prepared for what seems way too big to be called a spike. The popular SCOTUSblog, which provides news and information about the United States Supreme Court, was put to this test last week after the historic healthcare ruling, and it passed with flying colors, thanks to months of planning and a willingness to spend $25,000.

“We knew we needed to do whatever it took to make sure we were capable of handling what we knew would be the biggest day in this blog’s history,” says Max Mallory, deputy manager of the blog, who coordinates its IT.

The massive traffic spike was something of a perfect storm for SCOTUSblog, which Supreme Court litigator Tom Goldstein of the Washington, D.C., boutique Goldstein & Russell founded in 2002. Not only is the site a respected source of Supreme Court news and information, but in the days leading up to the ruling, buzz about the blog itself began picking up. President Barack Obama’s press secretary named SCOTUSblog as one source White House officials would monitor to hear news from the court.

When the news broke, two of the first media organizations to report it, Fox News and CNN, got the ruling wrong. Many media outlets cited SCOTUSblog as the first to correctly report that the Supreme Court upheld the Affordable Care Act in a 5-4 decision.

But even before “decision day,” as Mallory calls it, the small team at SCOTUSblog knew Thursday would put a lot of strain on the blog’s IT infrastructure. The first indications came during the healthcare arguments at the Supreme Court in March, when SCOTUSblog received almost 1 million page views over the three days of arguments. The blog’s single server at Web hosting company Media Temple just couldn’t handle the traffic.
“That was enough to crash our site at various points throughout those days and it just generally kept us slow for a majority of the time the arguments were going on,” Mallory says.

In the weeks leading up to the decision, Mallory worked with a hired team of developers to optimize the website’s Java code, install the latest plugins and generally tune up the site. Mallory realized that wouldn’t be enough, though. No one knew for sure when the high court would release its decision in the most anticipated Supreme Court case in years, but with each day that passed without a ruling, the chance grew that it would come down the next day.

Traffic steadily climbed leading up to the big day: The week before the ruling the site saw 70,000 visitors. Days before the decision, the site got 100,000. “It became clear we weren’t going to be able to handle the traffic we were expecting to see when the decision was issued,” Mallory says.

A week before the decision, Mallory reached out to Sound Strategies, a website optimization company that works specifically with WordPress. The Sound Strategies team worked throughout the weekend recoding the SCOTUSblog site again, installing high-end caching plugins, checking for script conflicts and cleaning out old databases from previous plugins that had been removed. The team also installed Nginx, the open source Web server, to run on the Media Temple hardware.

All of the improvements helped, but when the decision did not come on Tuesday, June 26, it became clear that Thursday, June 28, the last day of the court’s term, would be decision day. Mallory was getting worried: Earlier in the week SCOTUSblog had suffered a distributed denial-of-service (DDoS) attack targeting the website. That couldn’t happen on Thursday, when the court would issue the ruling. “This was our time, it just had to work,” Mallory says.

The night before decision day, Mallory and Sound Strategies took drastic measures.
Mallory estimated the site could see between 200,000 and 500,000 hits the next day, so the group decided to purchase four additional servers from Media Temple, which Sound Strategies configured overnight. By Thursday morning, SCOTUSblog had a setup in which a main server acted as the centralized host of the site, with four satellite servers hosting cached images of the website that were updated every six minutes.

A live blog providing real-time updates, which was the first to correctly report the news, was hosted by CoveritLive, a live blogging service.

As 10 a.m. EDT approached, the system was put to the test. At 10:03, the site was handling 1,000 requests per second. By 10:04 it had reached 800,000 total page views. That number climbed to 1 million by 10:10, and by 10:30 the site had received 2.4 million hits. Because of the satellite caching, Mallory says, the site was loading faster during peak traffic than it ever had before.

In post-mortem reviews, Sound Strategies engineers said they found evidence of two DDoS attacks, one at 9:45 a.m. and another at 10 a.m., which the servers were able to absorb.

“We built this fortress that was used basically for two hours that morning,” Mallory says. “It worked and it never slowed down.”

Since the healthcare decision, SCOTUSblog has seen higher-than-normal traffic, but nowhere near the 5 million page views the site amassed on the biggest day in the blog’s history.

“It was a roller coaster,” Mallory says. “You can have the best analysis, the fastest, most accurate reporting, but if your website crashes and no one can see it that moment, it doesn’t matter.”

Source: http://www.arnnet.com.au/article/429473/how_legal_blog_survived_traffic_tidal_wave_after_court_healthcare_ruling/?fp=4&fpid=1090891289
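
The article does not say how Sound Strategies implemented the satellite caching, so the following is only a minimal Python sketch of the general idea it describes: a satellite keeps a stored copy of each page and goes back to the main server only when that copy is more than six minutes old. The names `SnapshotCache`, `fetch_from_origin` and `origin_hits` are hypothetical and chosen for illustration; the six-minute interval is the only value taken from the article.

```python
import time
from typing import Callable, Dict, Tuple

# The six-minute update interval mentioned in the article, in seconds.
REFRESH_SECONDS = 6 * 60


class SnapshotCache:
    """Hypothetical satellite cache: serves a stored copy of a page and
    re-fetches it from the origin only when the copy has expired."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], str],
        ttl: float = REFRESH_SECONDS,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin  # assumed call to the main server
        self._ttl = ttl
        self._clock = clock              # injectable so the cache can be tested
        self._store: Dict[str, Tuple[float, str]] = {}
        self.origin_hits = 0             # how often the origin was contacted

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._store.get(path)
        # Serve the stored copy while it is younger than the TTL.
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]
        # Otherwise refresh the copy from the origin and remember when.
        body = self._fetch(path)
        self.origin_hits += 1
        self._store[path] = (now, body)
        return body
```

In this sketch, if a single page were requested at the 1,000 requests per second the article cites, the origin would be contacted about once per 360,000 requests (1,000 × 360 seconds), which illustrates why a main server behind such caches could stay responsive during the peak.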

Read the original post:

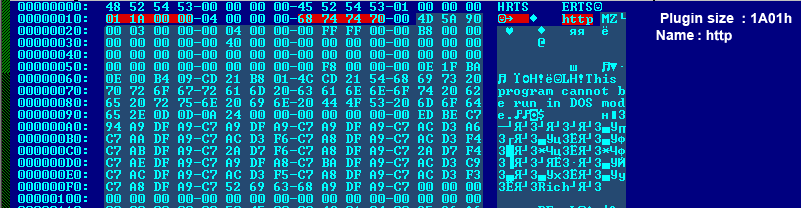

Legal blog site suffered Distributed Denial of Service ‘DDoS’ attack