Distributed denial of service attacks have morphed from a nuisance to something more sinister. In a DDoS attack, heavy volumes of traffic are hurled at a website to halt normal activity or inflict damage, typically freezing up the site for several hours. Such exploits achieved notoriety in the fall of 2012 when large banks were hit by a cyberterrorist group. But the Operation Ababil attacks were simply meant to stop banks’ websites from functioning. They caused a great deal of consternation among bank customers and the press, but little serious harm.

Since then, the attacks have become more nuanced and targeted, several recent reports show. “DDoS is a growing problem, the types of attack are getting more sophisticated, and the market is attracting new entrants,” said Rik Turner, a senior analyst at Ovum, a research and consulting firm. For example, “we’re seeing lots of small attacks with intervals that allow the attackers to determine how efficiently the victims’ mitigation infrastructure is and how quickly it is kicking in,” he said. This goes for banks as much as for nonbanking entities.

Verisign’s report on DDoS attacks carried out in the fourth quarter of 2014 found that the number of attacks against the financial industry doubled to account for 15% of all offensives. DDoS activity historically increases during the holiday season each year. “Cybercriminals typically target financial institutions during the fourth quarter because it’s a peak revenue and customer interaction season,” said Ramakant Pandrangi, vice president of technology at Verisign. “As hackers have become more aware of this, we anticipate the financial industry will continue to see an increase in the number of DDoS activity during the holiday season year over year.”

In a related trend, bank victims are getting hit repeatedly. “If you have an organization that’s getting hit multiple times, often that’s an indicator of a very targeted attack,” said Margee Abrams, director of security services at Neustar, an information services company. According to a report Neustar commissioned and released this week, in the financial services industry, 43% of bank targets were hit more than six times during 2014. Neustar worked with a survey sampling company that gathered responses from 510 IT directors in financial services, retail and IT services, with strong representation in financial services. (The respondents are not Neustar customers.)

The average bandwidth consumed by a DDoS attack increased to 7.39 gigabits per second, according to Verisign’s analysis of DDoS attacks in the fourth quarter of 2014. This is a 245% increase from the last quarter of 2013, and it’s larger than the incoming bandwidth most small and medium-sized businesses, such as community banks, can provision.

At the same time, DDoS attacks are shorter, as banks have gotten relatively adept at handling them. Most (88%) detect attacks in less than two hours (versus 77% for companies in general), according to Neustar’s research. And 72% of banks respond to attacks in that timeframe.

Some recent DDoS attacks on banks have been politically motivated. Last year, a hacker group called the European Cyber Army claimed responsibility for DDoS attacks against websites run by Bank of America, JPMorgan Chase, and Fidelity Bank. Little is known about the group, but it has aligned itself with Anonymous on some attacks and seems interested in undermining U.S. institutions, including the court system as well as large banks.

But while attacks from nation-states and hacktivists tend to grab headlines, it’s the stealthy, unannounced DDoS attacks, such as those against Web applications, that are more likely to gum up the works for bank websites for short periods and are in fact more numerous, Turner noted. They’re meant to test the strength of defenses or to distract the target from another type of attack. For example, a DDoS attack may be used as a smokescreen for online banking fraud or some other type of financially motivated fraud.

In Neustar’s study, 30% of U.S. financial services industry respondents said they suffered malware or virus installation and theft as a result of a DDoS attack. “What I hear from our clients is that DDoS is sometimes used as a method to divert security staff so that financial fraud can get through,” said Avivah Litan, vice president at Gartner. “But these occurrences seem to be infrequent.”

Her colleague Lawrence Orans, a research vice president for network security at Gartner, sounded skeptical about the frequency of DDoS-as-decoy schemes. “I think there is some fear-mongering associated with linking DDoS attacks with bank fraud,” he said. However, “the FBI has issued warnings about this in the past, so there is some validity to the issue of attackers using DDoS attacks as a smokescreen to distract a bank’s security team while the attacker executes fraudulent transactions.”

According to Verisign’s iDefense team, DDoS cybercriminals are also stepping up their attacks on point-of-sale systems and ATMs. “We believe this trend will continue throughout 2015 for financial institutions,” Pandrangi said. “Additionally, using an outdated operating system invites malware developers and other cyber-criminals to exploit an organization’s networks. What’s worse is that thousands of ATMs owned by the financial sector in the U.S. are running on the outdated Windows XP operating system, making it vulnerable to becoming compromised.”

Six-Figure Price Tag

DDoS attacks are unwelcome at any cost. Neustar’s study puts a price tag on the harm banks suffer during such attacks: $100,000 an hour for most banks that were able to quantify it. More than a third of the financial services firms surveyed reported costs of more than that.

“Those losses represent what companies stand to lose during peak hours of transactions on their websites,” said Abrams. “That doesn’t even begin to cover the losses in terms of expenses going out. For example, many attacks require six to ten professionals to mitigate the attack once it’s under way. That’s a lot of salaries going out that also represent losses for the company.”

Survey respondents also complained about the damage to their brand and customer trust during and after DDoS attacks. “That gets more difficult to quantify in terms of losses to an overall brand, but it’s a significant concern,” Abrams said.

To some, the $100,000 figure seems high. “Banks have other channels for their customers (mainly branch, ATM and phone), so I don’t see that much revenue being lost,” said Litan.

Other recent studies have also attempted to quantify the cost of a DDoS attack. A study commissioned by Incapsula surveyed IT managers from 270 North American organizations and found that the average cost of an attack was $40,000 an hour: 15% of respondents put the cost at under $5,000 an hour; 15% said it was more than $100,000.

There’s no question banks have had to spend millions in aggregate to mitigate DDoS risks. “They created more headroom by buying more bandwidth and by scaling the capacity of their web infrastructure, for example by buying more powerful web servers,” said Orans. “And they continue to spend millions on DDoS mitigation services. That’s where the real pain has been: the attackers forced the banks to spend a lot of money on DDoS mitigation.”

Source: http://www.americanbanker.com/news/bank-technology/banks-lose-up-to-100khour-to-shorter-more-intense-ddos-attacks-1073966-1.html?zkPrintable=1&nopagination=1

Taken from:

Banks Lose Up to $100K/Hour to Shorter, More Intense DDoS Attacks

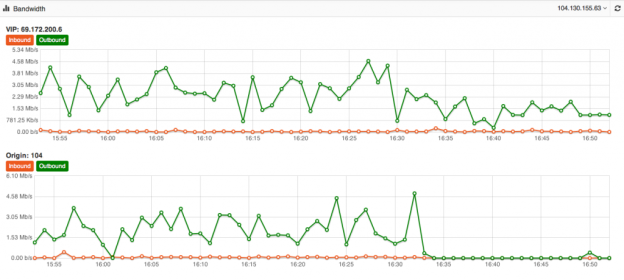

It’s one of the biggest mysteries I have seen in my 15+ years of Internet hosting and cloud-based services: why do people use a Content Delivery Network (CDN) for their website yet never fully optimize the site to take advantage of the CDN’s speed and volume capabilities? Just because you use a CDN doesn’t mean your site is automatically faster, or even able to take advantage of the CDN’s ability to dish out massive amounts of content in the blink of an eye.

At DOSarrest I have seen the same mystery continue, which is why I have put together this piece on using a CDN, to help those who wish to take full advantage of one. Most of this information is general and applies to any CDN, but I’ll also throw in some specifics that relate to DOSarrest.

Some common misconceptions about using a CDN

1. As soon as I’m configured to use a CDN, my site will be faster and able to handle a large number of web visitors on demand.
2. Website developers create websites that are already optimized, and a CDN won’t really change much.
3. There’s really nothing I can do to make my website run faster once it’s on a CDN.
4. All CDNs are pretty much the same.

Here’s what I have to say about the misconceptions noted above

1. In most cases the answer is NO! If the CDN is not caching your content, your site won’t be faster. In fact it will probably be a little slower, because every request has to go from the visitor to the CDN, which in turn fetches the content from your server and then sends the response back to the visitor.
2. In my opinion and experience, website developers in general do not optimize websites to use a CDN. In fact, most websites don’t even take full advantage of a browser’s caching capability. As the Internet has become ubiquitously faster, this fine art has been left by the wayside in most cases. Another reason, I think, is that websites are huge and complex, with a lot of dynamically generated content, and they run on very fast servers with large amounts of memory. Why spend time optimizing caching when a fast server will overcome the overhead?
3. Oh yes you can, and that’s why I have written this piece. See below.
4. No, they aren’t. Many CDNs don’t want you to know how things are really working from every node that they are broadcasting your content from. If you have to subscribe to a third-party monitoring service to find out, do it; it can be fairly expensive but is well worth it. How else will you know how your site is performing from other geographic regions?

A good CDN should let you know the following in real time, but many don’t:

a. Number of connections/requests between the CDN and visitors.
b. Number of connections/requests between the CDN and your server (origin). You want the number of requests to your server to be lower than the number of requests between the CDN and your visitors.
   *Tip: Use HTTP 1.1 on both “a” and “b” above, and try to extend the keep-alive time on the origin-to-CDN side.
c. Bandwidth between the CDN and Internet visitors.
d. Bandwidth between the CDN and your server (origin).
   *Tip: If the bandwidth of “c” and “d” is about the same, news flash: you can make things better.
e. Cache status of your content (how many requests are being served by the CDN).
   *Tip: This is the best metric for knowing whether you are using your CDN properly.
f. Performance metrics from outside the CDN but in the same geographic region.
   *Tip: Once you have performance metrics from several geographic regions, you can compare them before and after moving onto a CDN. If you’re caching properly, the further a region is from your origin server, the more its load times should improve.
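The comparisons suggested in the tips above (“b” against “a”, and “d” against “c”) come down to simple ratios. The following is a minimal sketch, assuming you can export request and byte counters for some time window; the `CdnSnapshot` class, its field names, and the sample numbers are hypothetical and are not taken from any CDN’s reporting API.

```python
from dataclasses import dataclass


@dataclass
class CdnSnapshot:
    """Hypothetical counters for one time window (names are assumptions)."""
    visitor_requests: int  # metric "a": requests between visitors and the CDN
    origin_requests: int   # metric "b": requests between the CDN and the origin
    visitor_bytes: int     # metric "c": bytes sent from the CDN to visitors
    origin_bytes: int      # metric "d": bytes fetched by the CDN from the origin


def offload(edge_total: int, origin_total: int) -> float:
    """Fraction of edge traffic that never reached the origin.

    Values near 1.0 mean the CDN serves almost everything from cache.
    Values near 0.0 (or below) mean the CDN is mostly passing traffic through.
    """
    if edge_total <= 0:
        return 0.0
    return 1.0 - origin_total / edge_total


def summarize(snapshot: CdnSnapshot) -> dict:
    """Compute request and bandwidth offload for one snapshot."""
    return {
        "request_offload": offload(snapshot.visitor_requests,
                                   snapshot.origin_requests),
        "bandwidth_offload": offload(snapshot.visitor_bytes,
                                     snapshot.origin_bytes),
    }


if __name__ == "__main__":
    # Made-up numbers: "c" and "d" are almost equal, so little is cached.
    sample = CdnSnapshot(visitor_requests=1000, origin_requests=950,
                         visitor_bytes=5_000_000, origin_bytes=4_800_000)
    for name, value in summarize(sample).items():
        print(f"{name}: {value:.1%}")
```

If both ratios stay close to zero, the CDN is acting as a pass-through proxy, which is the situation the tip on “c” and “d” warns about.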
For the record, DOSarrest provides all of the above in real time, and these are the tools I’ll use to explain how to take full advantage of any CDN. Without metrics, there’s no scientific way to know you’re on the right track to making your site super fast.

There are five main groups of cache control tags that will affect how and what is cached.

Expires : When attempting to retrieve a resource, a browser will usually check whether it already has a copy available for reuse. If the Expires date has passed, the browser will download the resource again.

Cache-Control : Introduced in HTTP 1.1, this expands on the functionality offered by Expires. Several options are available for the Cache-Control header:
– Public : This resource is cacheable. In the absence of any contradicting directive, this is assumed.
– Private : This resource is cacheable by the end user only. All intermediate caching devices will treat this resource as no-cache.
– No-cache : Do not reuse a cached copy of this resource without first revalidating it with the origin. Many proxies simply do not cache it.
– No-store : Do not cache and do not store the request or the response. I was never here, we never spoke. Capiche?
– Must-revalidate : Do not use stale copies of this resource.
– Proxy-revalidate : The end user may use stale copies, but intermediate caches must revalidate.
– Max-age : The length of time (in seconds) before a resource is considered stale.
A response may include any combination of these directives, for example: private, max-age=3600, must-revalidate.

X-Accel-Expires : This functions just like the Expires header, but is intended only for proxy services. Browsers are meant to ignore it, and it should be stripped out when the response traverses a proxy.

Set-Cookie : While not explicitly specifying a cache directive, cookies are generally designed to hold user-specific or session-specific information. Caching such resources would have a negative impact on the desired site functionality.

Vary : Lists the request headers that determine distinct copies of the resource. A cache needs to keep a separate copy of the resource for each distinct set of values in the headers named by Vary. A Vary value of “*” indicates that each request is unique.

Given that most websites, in my opinion, are not taking full advantage of caching by a browser or by a CDN (if they use one), there is still a way around this without reviewing and adjusting every cache-control header on your website. Any CDN worth its cost, as well as any cloud-based DDoS protection company, should be able to override most website cache-control headers.

For demonstration purposes we used our own live website, DOSarrest.com, and ran a traffic generator to stress the server a little on top of our regular visitor traffic. The demonstration shows what happens when traffic passes through a CDN, in terms of activity between the CDN and the Internet visitor and between the CDN and the customer’s server on the back end.

At approximately 16:30 we enabled a feature of DOSarrest’s service that we call “Forced Caching”. It overrides (in other words, ignores) some of the origin server’s cache control headers.

These are the results:

Notice that bandwidth between the CDN and the origin (second graph) has fallen by over 90%. This saves resources on the origin server and makes things faster for the visitor.

This is the best graphic illustration to let you know that you’re on the right track: cache hits go way up, “not cached” goes down, and expired entries and misses are negligible.

The graph below shows that requests to the origin have dropped by 90%, which tells you the CDN is doing the heavy lifting.

Last but not least, this is the fruit of your labor as seen by 8 sensors in 4 geographic regions from our customer “DEMS” portal. The site is running 10 times faster in every location, even under load!
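To make the header rules described above more concrete, here is a simplified sketch of how a shared cache such as a CDN node might decide how long it may keep a response. It is an illustration under stated assumptions, not a complete HTTP caching implementation and not a description of how DOSarrest’s Forced Caching works: the function names and the `forced_ttl` parameter are hypothetical, `no-cache` is handled conservatively as “do not cache”, and X-Accel-Expires is left out.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Dict, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value into lowercase directive names and arguments."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def shared_cache_ttl(headers: Dict[str, str],
                     now: Optional[datetime] = None,
                     forced_ttl: Optional[int] = None) -> Optional[int]:
    """Return the seconds a shared cache may keep the response, or None."""
    h = {k.lower(): v for k, v in headers.items()}
    now = now or datetime.now(timezone.utc)

    # Cookies usually carry per-user state, and "Vary: *" marks every request
    # as unique, so such responses are never shared between visitors here.
    if "set-cookie" in h or h.get("vary", "").strip() == "*":
        return None

    # Hypothetical override: ignore the origin's freshness headers entirely.
    if forced_ttl is not None:
        return forced_ttl

    cc = parse_cache_control(h.get("cache-control", ""))
    if any(d in cc for d in ("private", "no-store", "no-cache")):
        return None

    # max-age takes precedence over Expires when both are present.
    max_age = cc.get("max-age")
    if max_age is not None:
        try:
            return max(int(max_age), 0)
        except ValueError:
            return None

    if "expires" in h:
        try:
            expires = parsedate_to_datetime(h["expires"])
        except (TypeError, ValueError):
            return None  # unparseable date: treat as not cacheable
        if expires.tzinfo is None:
            expires = expires.replace(tzinfo=timezone.utc)
        return max(int((expires - now).total_seconds()), 0)

    return None  # no freshness information: this sketch does not guess


if __name__ == "__main__":
    print(shared_cache_ttl({"Cache-Control": "public, max-age=3600"}))
    print(shared_cache_ttl({"Cache-Control": "private, max-age=3600, must-revalidate"}))
    print(shared_cache_ttl({"Cache-Control": "no-cache"}, forced_ttl=300))
```

In this sketch the override still refuses to cache responses that set cookies or send `Vary: *`. That is one possible reading of overriding only “some” headers, chosen here so that per-user content is not shared by accident.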

Hacktivists have launched a distributed denial-of-service attack against the website of TMT (Thirty Meter Telescope), which is planned to be the Northern Hemisphere’s largest, most advanced optical telescope. For at least two hours yesterday, the TMT website at www.tmt.org was inaccessible to internet users.

Sandra Dawson, a spokesperson for the TMT project, confirmed to the Associated Press that the site had come under attack: “TMT today was the victim of an unscrupulous denial of service attack, apparently launched by Anonymous. The incident is being investigated.”

You might think that a website about a telescope is a strange target for hackers wielding the blunt weapon of a DDoS attack, who might typically be more interested in attacking government websites for political reasons or taking down an unpopular multinational corporation. Why would hackers want to launch such a disruptive attack against a telescope website? Surely the only people who don’t like telescopes are the aliens in outer space who might be having their laundry peeped at from Earth?

It turns out there’s a simple reason why the Thirty Meter Telescope is stirring emotions so strongly: it hasn’t been built yet. The construction of the proposed TMT is controversial because it is planned to be built on Mauna Kea, a dormant 13,796-foot-high volcano in Hawaii. This has incurred the wrath of environmentalists and native Hawaiians who consider the land to be sacred. There has been considerable opposition to the building of the telescope on Mauna Kea, as this news report from last year makes clear.

Now it appears the protest about TMT has spilt over onto the internet in the form of a denial-of-service attack. Operation Green Rights, an Anonymous-affiliated group which also campaigns against controversial corporations such as Monsanto, claimed on its Twitter account and website that it was responsible for the DDoS attack. The hacktivists additionally claimed credit for taking down the Aloha State’s official website.

It is clear that denial-of-service attacks are being deployed more and more, as perpetrators attempt to use the anonymity of the internet to hide their identity and stage the digital version of a “sit down protest” or blockade to disrupt organisations. Tempting as it may be to participate in a DDoS attack, it’s important that everyone remembers that if the authorities determine you were involved, you can end up going to jail as a result. Peaceful, law-abiding protests are always preferable.

Source: http://www.welivesecurity.com/2015/04/27/tmt-website-ddos/
